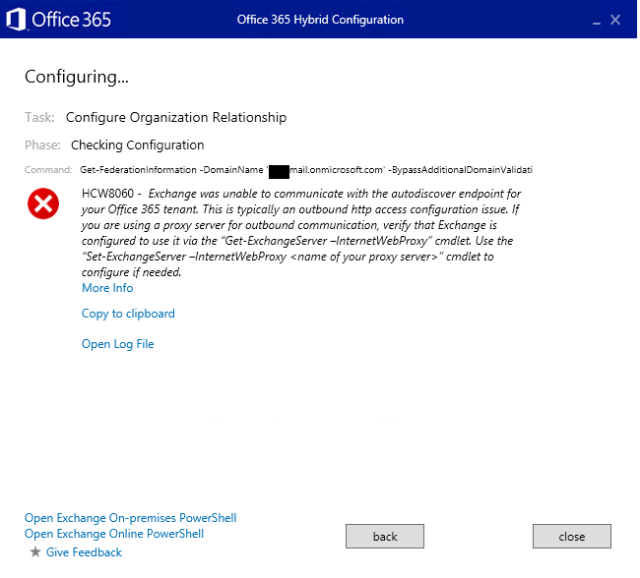

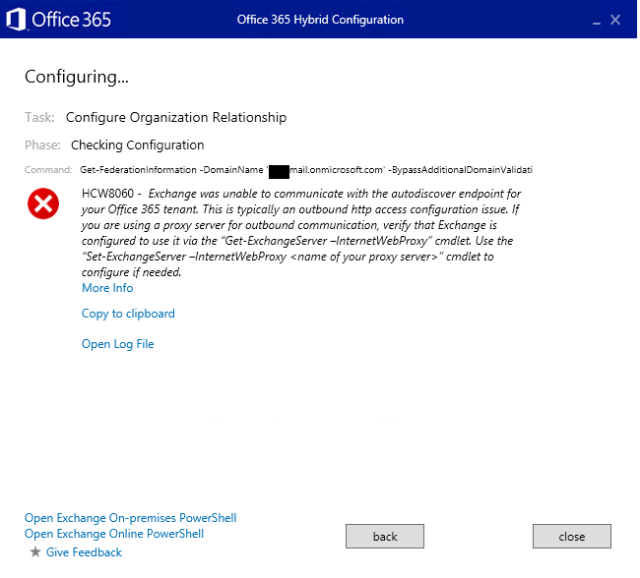

Recently I ran into a problem preparing for the April 15th TLS Cert Update from Microsoft. We would get to a point in the Hybrid Configuration Wizard and it would fail to connect in a spectacular fashion:

You can see that it’s saying that it can’t hit the autodiscover endpoint. If you open up the log file, you can find the following lines by searching for “get-federationinformation”:

2016.04.08 16:18:11.627 [Workflow=Hybrid, Task=OrganizationRelationship, Phase=CheckConfiguration] START

2016.04.08 16:18:11.657 [Session=OnPremises, Cmdlet=Get-FederationInformation] START Get-FederationInformation -DomainName '<tenantID>.mail.onmicrosoft.com' -BypassAdditionalDomainValidation: $true

2016.04.08 16:18:22.974 *ERROR* [Provider=OnPremises] Error Record: {CategoryInfo={Activity=Get-FederationInformation,Category=1001,Reason=GetFederationInformationFailedException,TargetName=,TargetType=},ErrorDetails=,Exception=Federation information could not be received from the external organization.,FullyQualifiedErrorId=[Server=nope,RequestId=nope,TimeStamp=4/8/2016 4:18:22 PM] [FailureCategory=Cmdlet-GetFederationInformationFailedException] D3A3CDB8,Microsoft.Exchange.Management.SystemConfigurationTasks.GetFederationInformation}

2016.04.08 16:18:23.000 *ERROR* [Session=OnPremises, Cmdlet=Get-FederationInformation] FINISH Time=11.3s Results=PowerShell failed to invoke 'Get-FederationInformation': Federation information could not be received from the external organization.

2016.04.08 16:18:23.050 *ERROR* [Workflow=Hybrid, Task=OrganizationRelationship, Phase=CheckConfiguration] Microsoft.Online.CSE.Hybrid.Engine.TaskException: Task 'OrganizationRelationship' failed during phase 'CheckConfiguration': Get-FederationInformation -DomainName '<tenantID>.mail.onmicrosoft.com' -BypassAdditionalDomainValidation: $true Errors Exchange was unable to communicate with the autodiscover endpoint for your Office 365 tenant. This is typically an outbound http access configuration issue. If you are using a proxy server for outbound communication, verify that Exchange is configured to use it via the “Get-ExchangeServer –InternetWebProxy” cmdlet. Use the “Set-ExchangeServer –InternetWebProxy <name of your proxy server>” cmdlet to configure if needed. ---> Microsoft.Online.CSE.Hybrid.Engine.WorkflowException: HCW8060 https://support.office.com/article/Office-365-URLs-and-IP-address-ranges-8548a211-3fe7-47cb-abb1-355ea5aa88a2 Exchange was unable to communicate with the autodiscover endpoint for your Office 365 tenant. This is typically an outbound http access configuration issue. If you are using a proxy server for outbound communication, verify that Exchange is configured to use it via the “Get-ExchangeServer –InternetWebProxy” cmdlet. Use the “Set-ExchangeServer –InternetWebProxy <name of your proxy server>” cmdlet to configure if needed.

at Microsoft.Online.CSE.Hybrid.StandardWorkflow.OrganizationRelationshipTask.get_OnpremisesFederationInfo()

at Microsoft.Online.CSE.Hybrid.StandardWorkflow.OrganizationRelationshipTask.NeedsConfiguration()

at Microsoft.Online.CSE.Hybrid.Engine.Engine.ExecutePhase(ILogger logger, TaskPhase phase, IWorkflow workflow, ITask task, Func`3 phaseFunction, Boolean throwOnFalse)

--- End of inner exception stack trace ---

at Microsoft.Online.CSE.Hybrid.Engine.Engine.ExecutePhase(ILogger logger, TaskPhase phase, IWorkflow workflow, ITask task, Func`3 phaseFunction, Boolean throwOnFalse)

at Microsoft.Online.CSE.Hybrid.Engine.Engine.ExecuteTask(ILogger logger, IWorkflow workflow, ITask task)

2016.04.08 16:18:23.052 *ERROR* [Workflow=Hybrid, Task=OrganizationRelationship, Phase=CheckConfiguration] FINISH Time=11.4s Results=PASSED

2016.04.08 16:18:23.053 *ERROR* [Workflow=Hybrid, Task=OrganizationRelationship] FINISH Time=12.7s Results=FAILED

2016.04.08 16:18:23.054 *ERROR* [Workflow=Hybrid] FINISH Time=17.4s Results=FAILED
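If you would rather not scroll through the log by hand, the search described above can be sketched in PowerShell. This is only a minimal sketch: the function name `Get-HcwLogLine` and the log path in the usage note are hypothetical, so point it at whichever log file the wizard actually gave you.

```powershell
# Minimal sketch: return the lines of a log file that contain a literal string.
# Get-HcwLogLine is a hypothetical helper name, not part of Exchange or the HCW.
function Get-HcwLogLine {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [string]$Pattern = 'Get-FederationInformation'
    )
    # -SimpleMatch treats the pattern as plain text rather than a regex,
    # so a search for '*ERROR*' matches the literal asterisks.
    # Select-String is case-insensitive unless -CaseSensitive is given.
    Select-String -Path $Path -Pattern $Pattern -SimpleMatch |
        ForEach-Object { $_.Line }
}
```

For example, `Get-HcwLogLine -Path 'C:\Temp\hcw.log'` (an assumed path) lists every line that mentions the cmdlet, and adding `-Pattern '*ERROR*'` lists only the lines the wizard flagged as errors.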

This seemed pretty weird, given that I was running the tool on our hybrid nodes, which have the appropriate firewall rules to reach the required O365 URLs.

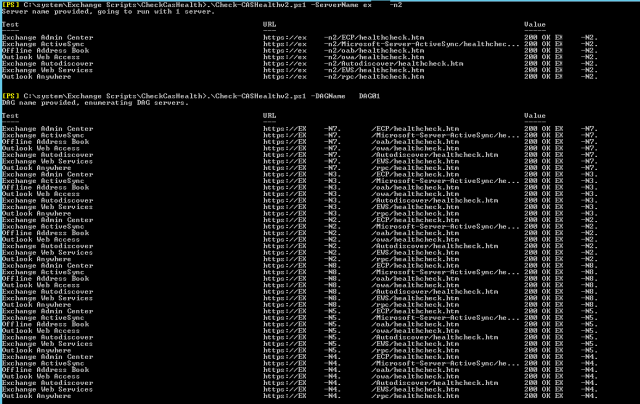

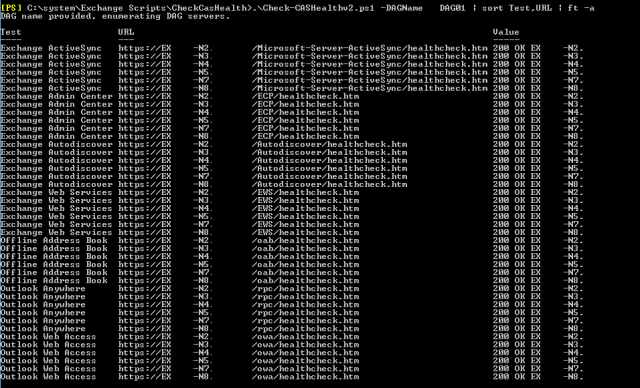

So I took the command from the logs, opened up a PowerShell session with Chris Lehr’s tool, ran the command, and got back something interesting:

PS C:\system\Install-PowerShellOptions-1.5> Get-FederationInformation -DomainName 'contoso.mail.onmicrosoft.com' -BypassAdditionalDomainValidation: $true

Creating a new session for implicit remoting of "Get-FederationInformation" command...

Federation information could not be received from the external organization.

+ CategoryInfo : NotSpecified: (:) [Get-FederationInformation], GetFederationInformationFailedException

+ FullyQualifiedErrorId : [Server=<Not-the-Hybrid-Node FQDN>,RequestId=05b20ab1-ea19-4c4c-9356-c24145e96cc8,TimeStamp=4/12/2016 6:06:23 PM] [FailureCategory=Cmdlet-GetFederationInformationFailedException] D3A3CDB8,Microsoft.Exchange.Management.SystemConfigurationTasks.GetFederationInformation

+ PSComputerName : <Hybrid Node FQDN>

I ended up opening a case with Microsoft about this, and the engineer and I spent a few days convinced that it was a problem with the firewall, but all of our tests from our hybrid node worked…except for this command.

The problem turned out to be multifaceted, but it had two root causes:

- We are on Exchange 2013 CU11 (we typically run 1 CU behind the latest to allow for community testing).

- We recently upgraded our firewalls to new, shiny ones.

In Exchange 2013 CU11, Microsoft introduced a feature called mailbox anchoring for remote PowerShell sessions. With it, the account you connect with determines which server actually runs your commands: if your admin account has a mailbox hosted on Server A and you connect to Server B’s PowerShell, the commands you run are proxied to Server A. Microsoft received some feedback, and by the time CU12 was released they had reversed this change.
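To see where anchoring is likely to send your commands, you can look up which database holds the mailbox of the account you connect with. This is a rough sketch, meant to be run from the Exchange Management Shell. The identity `admin@contoso.com` is a placeholder, and I am assuming that the proxied commands land on the server holding the active copy of that database.

```powershell
# Placeholder identity: replace with the admin account you actually connect with.
$adminIdentity = 'admin@contoso.com'

# Find the database that holds the admin account's mailbox.
$mailbox = Get-Mailbox -Identity $adminIdentity
$mailbox | Format-List Name, Database, ServerName

# List the copies of that database. Copy names take the form 'Database\Server',
# and the copy whose Status is 'Mounted' is the active one.
Get-MailboxDatabaseCopyStatus -Identity ([string]$mailbox.Database) |
    Format-Table Name, Status -AutoSize
```

If the server that comes back is not the one you connected to, that is consistent with the mismatch between `Server=` and `PSComputerName` in the error output above.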

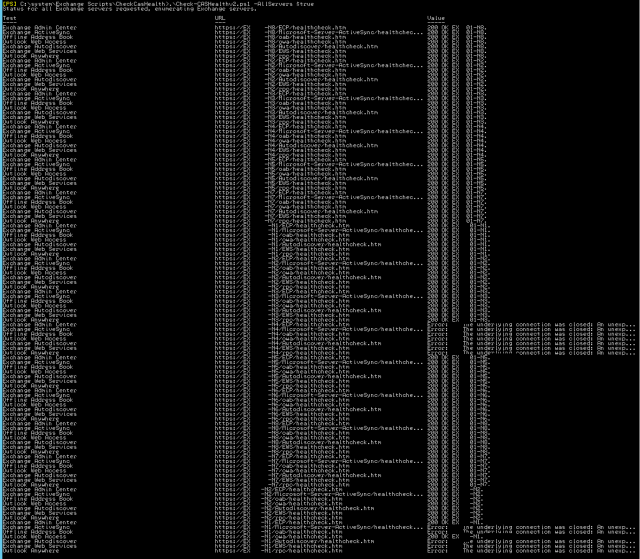

This meant that Get-FederationInformation was not actually running on the hybrid node. It was being proxied to the mail cluster that serves our on-premises users, and our new firewalls, which had been implemented to lock everything down as tight as could be, were not ready for outbound calls from those nodes. The command was basically trying to get to the autodiscover-s.outlook.com URL, and it failed to get its results.

It took us a few days to string this series of problems together and come up with the very simple solution of allowing the on-premises cluster nodes to access the O365 URLs, at which point the Hybrid Configuration Wizard worked like a champ.
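A quick way to check a firewall rule like this is to test outbound TCP from every node that might end up running the command, not just the hybrid node. The helper below is a sketch using the .NET `TcpClient` class. `Test-TcpPort` is a hypothetical name, and it only shows that a TCP connection can be opened. It does not prove that the autodiscover request itself succeeds, and it does not go through any web proxy.

```powershell
# Minimal sketch: return $true if a TCP connection to HostName:Port opens
# within the timeout, otherwise $false. Test-TcpPort is a hypothetical helper.
function Test-TcpPort {
    param(
        [Parameter(Mandatory = $true)][string]$HostName,
        [int]$Port = 443,
        [int]$TimeoutMs = 5000
    )
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        # Start the connection attempt and wait up to the timeout for it to finish.
        $async = $client.BeginConnect($HostName, $Port, $null, $null)
        if (-not $async.AsyncWaitHandle.WaitOne($TimeoutMs)) { return $false }
        # EndConnect throws if the attempt finished with an error (for example, refused).
        $client.EndConnect($async)
        return $true
    }
    catch { return $false }
    finally { $client.Close() }
}
```

Running `Test-TcpPort -HostName 'autodiscover-s.outlook.com'` locally on each on-premises node should return `True` once the rule is in place. Had we run something like this on the cluster nodes instead of only the hybrid node, the blocked path would probably have shown up much sooner.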

We’ll be rolling out CU12 within the coming weeks to handle the mailbox anchoring issue.